Executive Summary

OpenAI's ChatGPT has gained significant traction and popularity since its launch in November 2022. OpenAI's models, including ChatGPT, are widely used in a variety of applications, such as language translation, question answering, and content generation. Businesses, researchers, and developers also use these models to build new applications and services, and the models are integrated into a growing number of products and platforms.

Most recently, Microsoft announced its new premium version of Microsoft Teams, which is powered by the same AI technology that runs ChatGPT. The premium features include automated note taking and bulleted summaries of key takeaways from meetings. In a blog post, Microsoft said that "modern tools powered by AI hold the promise to boost individual, team, and organizational-level productivity and fundamentally change how we work."

We've all heard the saying "with great power comes great responsibility." Unfortunately, threat actors have begun using this AI for malicious purposes. Their uses range from phishing templates to encryption tools, and this misuse has sparked controversy and new conversations about the modern cyber threat landscape.

Analysis

Check Point is a cybersecurity company that specializes in network, cloud, and mobile security solutions. The company conducts research in many areas of cybersecurity, including AI and machine learning. Recently, the Check Point Research team found multiple instances of cybercriminals on underground forums discussing the malicious uses and benefits of ChatGPT. The Check Point report (reference 1) details three of these use cases.

The first case describes an infostealer built by using ChatGPT to recreate malware strains and techniques documented in research publications about common malware. The stealer searches for 12 common file types, including Microsoft Office documents, PDFs, and images.

The second case involves a multi-layer encryption tool written in Python. The threat actor, known as "USDoD", confirmed that OpenAI's technology helped them write the script.

The third case shows how ChatGPT can facilitate fraud. Forum discussions highlighted how simple it was to use ChatGPT to create a dark web marketplace. Such a marketplace provides a platform for the automated trade of illegal or stolen goods, including accounts, payment cards, malware, drugs, and ammunition. Transactions and payments are processed in cryptocurrency.

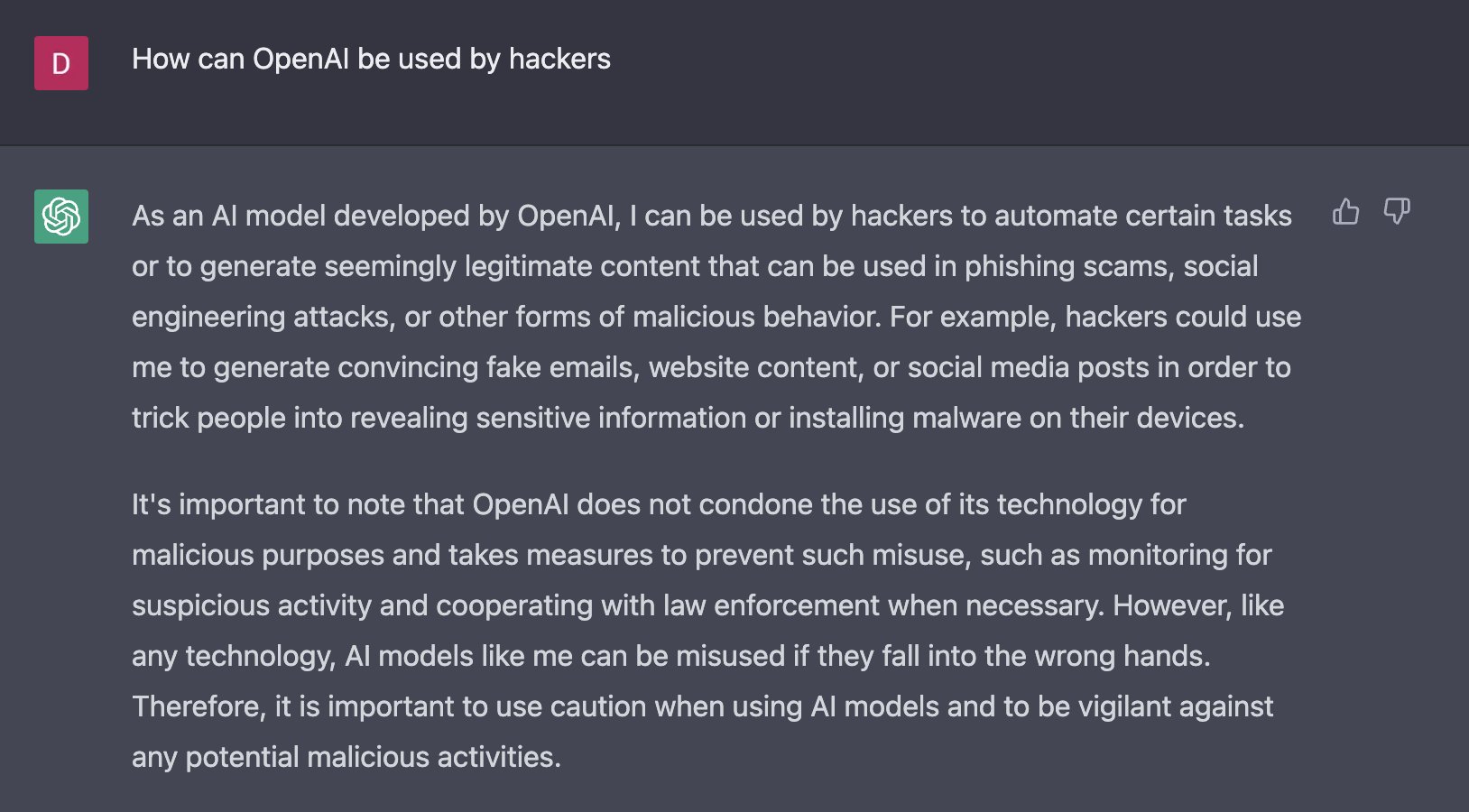

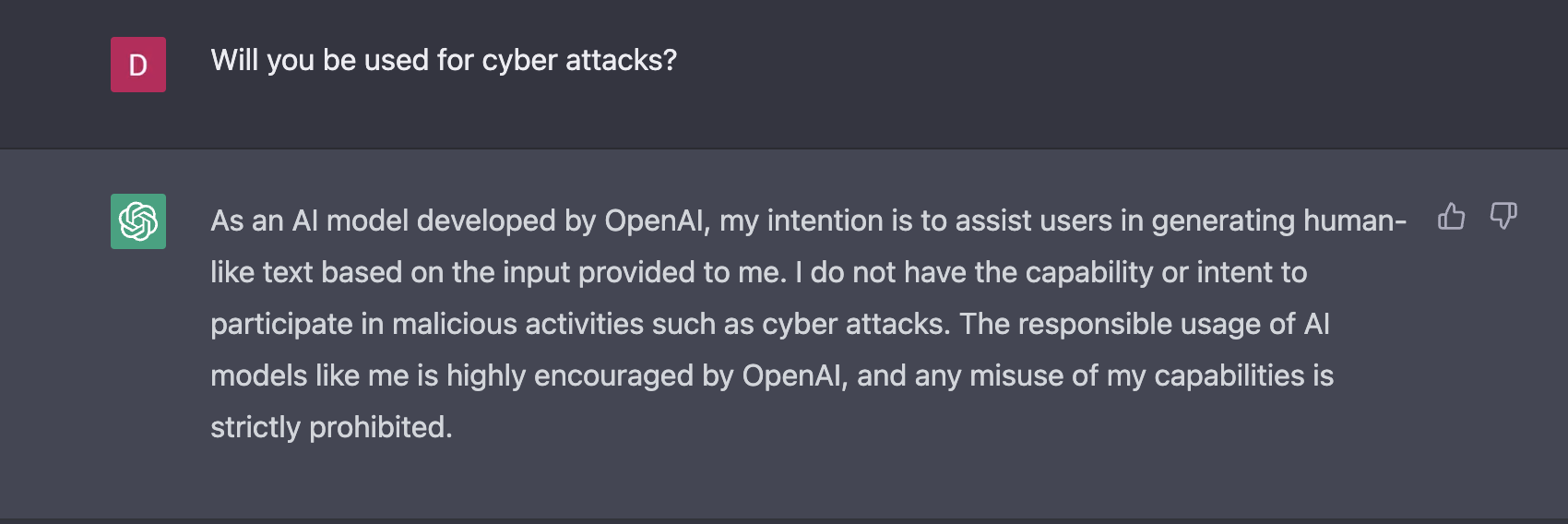

After reviewing Check Point's report and additional open source intelligence (OSINT), I decided to try ChatGPT myself to understand how it responds to malicious adversaries, threat actors, and hackers today.

As a language model developed by OpenAI, ChatGPT does not have the ability to detect or respond to malicious activity, because its sole purpose is to generate text based on the input provided.

Conclusion

ChatGPT, as a language model developed by OpenAI, is a powerful tool that can provide users with a wide range of information and answer many kinds of questions. However, like any other AI technology, it also presents security risks that need to be addressed. A language model like ChatGPT can contribute to the spread of misinformation or the exploitation of sensitive information. It is therefore essential to put proper security measures in place, such as access controls that restrict access to sensitive information and regular monitoring and updating of the model's training data to prevent malicious manipulation.

Users should also exercise caution when interacting with AI systems like ChatGPT, especially when sharing sensitive information such as personal and financial data. It is good practice to verify the accuracy of the information these systems provide and not to rely solely on them when making important decisions. ChatGPT has the potential to be a valuable tool, but it is crucial to be aware of its security implications and to take appropriate measures to mitigate the risks.

References

1. Check Point Research. "OPWNAI : CYBERCRIMINALS STARTING TO USE CHATGPT", January 6, 2023. URL: https://research.checkpoint.com/2023/opwnai-cybercriminals-starting-to-use-chatgpt/

2. OpenAI. "ChatGPT: Optimizing Language Models for Dialogue", November 30, 2022. URL: https://openai.com/blog/chatgpt/

3. Microsoft. "Microsoft Teams Premium: Cut costs and add AI-powered productivity", February 1, 2023. URL: https://www.microsoft.com/en-us/microsoft-365/blog/2023/02/01/microsoft-teams-premium-cut-costs-and-add-ai-powered-productivity/

4. Sharma, Ax. Bleeping Computer. "OpenAI's new ChatGPT bot: 10 dangerous things it's capable of", December 6, 2022. URL: https://www.bleepingcomputer.com/news/technology/openais-new-chatgpt-bot-10-dangerous-things-its-capable-of/